Problem: Dealership information is captured in multiple external systems. The business needs a dashboard that provides a 360° view of the data, so that dealer information, sales data, and other dealership details can be tracked in one place. The dashboard is intended for business users in the organization, for monitoring purposes.

Let us take an example dealership architecture in which dealer identities and domain information are stored in an external Azure AD, and the basic details of each dealership are stored in an Azure Cosmos database.

Note: The dealership use case is only an example for exploring the possibilities. It can be replaced with any other data model.

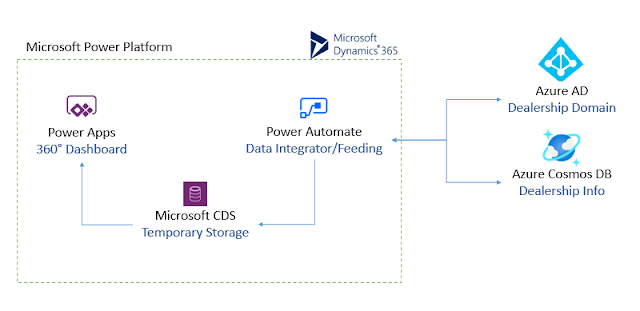

Design & Solution Considerations

The following elements/components are considered for building this solution.

- Azure Cosmos DB, which holds the dealership's basic information.

- Azure Active Directory, which holds the domain/identity information of dealership users. [This is an external, separate domain that holds only dealership users.]

- Power Automate, which integrates the data and pushes it to the CRM system.

- Microsoft Common Data Service (CDS), which acts as intermediate storage and contains subsets of information from the two other systems. [Azure Cosmos DB and Azure AD]

- Power Apps (model-driven app), which provides the necessary dashboards for business users.

The following shows the high-level design of the 360-degree architecture, which integrates data from multiple systems.

High-level design for the dashboard providing a 360-degree view of dealership data

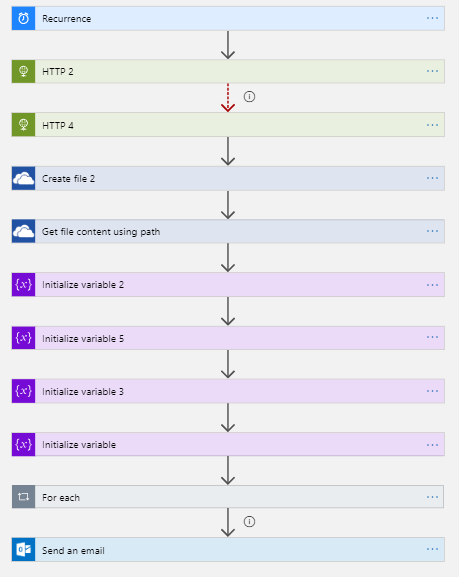

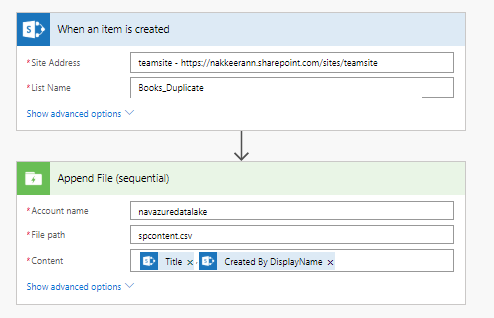

The following illustrates the design.

- The necessary data model (entities) is created in Microsoft CDS to capture the data from the multiple systems. For this use case, let us focus on one entity, i.e., dealers.

- The flow configured in Power Automate runs as a scheduled job. It pulls minimal information from Azure AD and the Azure Cosmos database, and synchronizes that data subset into Microsoft CDS.

- Power Apps, with its views, forms, and dashboards, pulls and displays the information from the entities configured in the underlying CDS.
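The synchronization step described above can be sketched in plain Python to make the logic concrete. This is only an illustration of the merge performed by the scheduled job, not the Power Automate flow itself. The field names (`dealerId`, `displayName`, `mail`, `city`, `phone`) and the assumption that both systems share a dealer id are hypothetical and would depend on the actual schemas.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class DealerRecord:
    """Hypothetical subset of dealer data stored in the CDS 'dealers' entity."""
    dealer_id: str
    display_name: str            # assumed to come from Azure AD
    email: str                   # assumed to come from Azure AD
    city: Optional[str] = None   # assumed to come from Cosmos DB
    phone: Optional[str] = None  # assumed to come from Cosmos DB


def merge_dealers(ad_users: Iterable[dict],
                  cosmos_docs: Iterable[dict]) -> List[DealerRecord]:
    """Join identity records and detail documents on a shared dealer id.

    Every Azure AD user yields one record; details are filled in only
    when a matching Cosmos document exists.
    """
    # Index the detail documents by the (assumed) shared key.
    details: Dict[str, dict] = {doc["dealerId"]: doc for doc in cosmos_docs}

    merged: List[DealerRecord] = []
    for user in ad_users:
        doc = details.get(user["dealerId"], {})
        merged.append(
            DealerRecord(
                dealer_id=user["dealerId"],
                display_name=user["displayName"],
                email=user["mail"],
                city=doc.get("city"),
                phone=doc.get("phone"),
            )
        )
    return merged


if __name__ == "__main__":
    ad = [{"dealerId": "d1", "displayName": "Contoso Motors",
           "mail": "owner@contoso.example"}]
    cosmos = [{"dealerId": "d1", "city": "Pune", "phone": "555-0100"}]
    for record in merge_dealers(ad, cosmos):
        print(record)
```

Keeping only a small, fixed set of fields per dealer mirrors the idea that CDS holds a subset of each source system rather than a full copy.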

Now let us get deeper into the solution, to see how these are configured.