In this article, we will see how SPFx solutions built for Microsoft Teams and SharePoint can be automatically packaged and uploaded to the app catalog. The automation is achieved with Azure DevOps and GitHub version control. Using DevOps practices ensures continuous delivery of changes to the target platforms.

The web parts in this solution target SharePoint and also run as tabs in Microsoft Teams. We will use a GitHub repository as the version control tool for storing the solution. This article also shows how GitHub can be easily integrated with Azure DevOps to automate the packaging process.

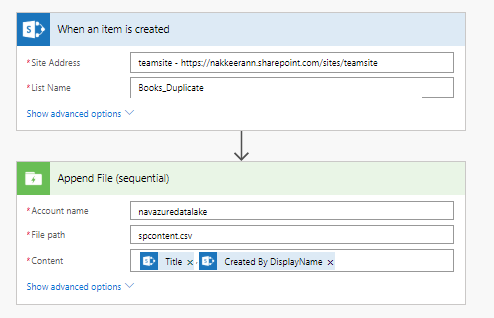

Create an SPFx solution that is compatible with SharePoint Online. The SPFx solution (available on GitHub at https://github.com/nakkeerann/globalspfxsoln) contains the azure-pipelines configuration file template for setting up the build pipelines. The following snapshot shows the configuration used to create the SPFx solution.
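For orientation, an `azure-pipelines.yml` file for an SPFx project often looks roughly like the sketch below. This is not the file from the repository above. It is a minimal illustration that assumes a `master` branch trigger, a Microsoft-hosted Ubuntu agent, a Node.js version matching your SPFx release (`10.x` here is only a placeholder), and an artifact name of `drop`. It covers packaging only, not the upload to the app catalog.

```yaml
# Hypothetical sketch of an azure-pipelines.yml for an SPFx project.
# Branch name, agent image, Node.js version and artifact name are assumptions.
trigger:
  - master

pool:
  vmImage: 'ubuntu-latest'

steps:
  # Pick a Node.js version supported by your SPFx version (placeholder value).
  - task: NodeTool@0
    displayName: 'Use Node.js 10.x'
    inputs:
      versionSpec: '10.x'

  # Restore the project's npm dependencies, including the local gulp.
  - script: npm install
    displayName: 'Install dependencies'

  # Build a release bundle of the web parts.
  - script: npx gulp bundle --ship
    displayName: 'Bundle the solution'

  # Produce the .sppkg package under sharepoint/solution.
  - script: npx gulp package-solution --ship
    displayName: 'Package the solution'

  # Copy the generated package into the staging directory.
  - task: CopyFiles@2
    displayName: 'Collect the .sppkg file'
    inputs:
      Contents: 'sharepoint/solution/*.sppkg'
      TargetFolder: '$(Build.ArtifactStagingDirectory)'

  # Publish the package so that a later stage or release can deploy it.
  - task: PublishBuildArtifacts@1
    displayName: 'Publish the package as a build artifact'
    inputs:
      PathtoPublish: '$(Build.ArtifactStagingDirectory)'
      ArtifactName: 'drop'
```

The two `gulp` steps are the same commands you would run locally to package an SPFx solution. The pipeline runs them on every push to the trigger branch.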

Push the code to GitHub using git commands. For example, the commands below were used for my repository.

    git init
    git add .
    git commit -m "first commit"
    git remote add origin https://github.com/nakkeerann/globalspfxsoln.git
    git push -f origin master

Install Azure Pipelines into your GitHub account from https://github.com/marketplace/azure-pipelines . Select the repositories for which the pipelines should be installed, and then authorize Azure Pipelines.